AI Winters and Breakthroughs: Why Artificial Intelligence Has Failed—and Recovered—Multiple Times

Introduction: A Field of Repeated Collapse and Renewal

Artificial intelligence is often presented as a steady march of progress—each decade bringing machines closer to human-like intelligence. The real history is far more uneven. Periods of intense optimism have repeatedly been followed by disappointment, funding cuts, and skepticism. These downturns are known as AI winters, moments when expectations collapsed faster than the technology could mature.

Understanding why artificial intelligence has failed and recovered multiple times is essential for making sense of today's renewed excitement. AI winters were not caused by a lack of talent or effort among researchers. They emerged from a combination of technical limits, unrealistic promises, institutional decisions, and misunderstandings about the nature of human intelligence itself.

This article explores the major AI winters, the breakthroughs that followed them, and the lessons they offer for the current era. Rather than treating AI as an unstoppable force, it takes a human-centered view—one that emphasizes judgment, policy, and responsibility alongside technological progress.

What Is an AI Winter?

An AI winter refers to a period of reduced funding, interest, and confidence in artificial intelligence research. During these times, governments and organizations scale back investment after realizing that earlier promises were not achievable within expected timelines.

AI winters are not simply moments when research stopped. Work continued, often quietly and with less visibility. What disappeared was large-scale institutional confidence—the belief that AI would soon deliver broad, human-level intelligence or major economic transformation.

These cycles of enthusiasm and disappointment are not unique to AI, but the field has experienced them more dramatically than most areas of technology.

Early Optimism and the Seeds of Disappointment

From the beginning, artificial intelligence was shaped by ambition. In the 1950s and 1960s, early researchers believed that human reasoning could be formalized into rules and symbols. Early successes, such as Arthur Samuel's checkers-playing program and the Logic Theorist, which proved theorems from Whitehead and Russell's Principia Mathematica, created the impression that general intelligence was close.

However, these early systems operated in highly constrained environments. As researchers attempted to scale them to real-world complexity, limitations became obvious. These limitations set the stage for the first AI winter.

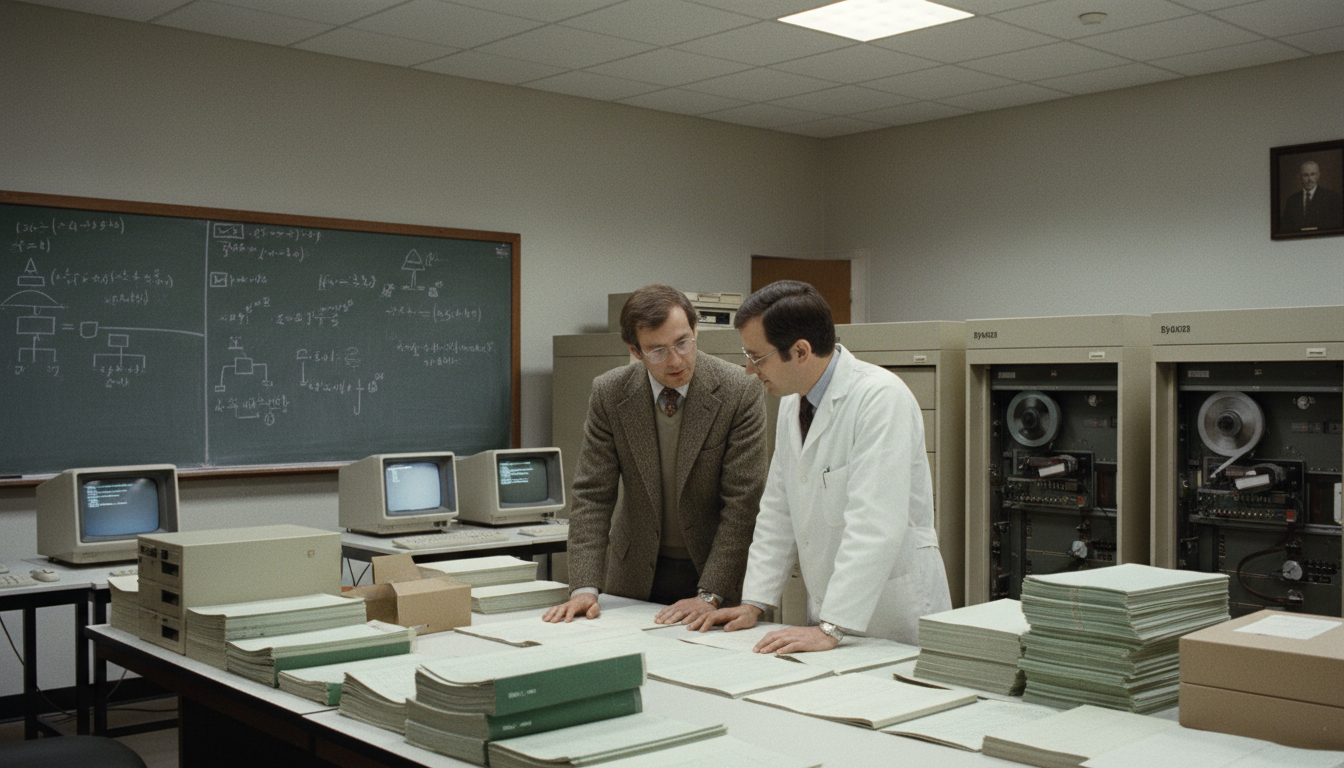

The First AI Winter (1970s): When Symbolic AI Hit Its Limits

The first major AI winter is usually dated to the mid-to-late 1970s. Governments and funding agencies had invested heavily in symbolic, rule-based AI systems. These systems showed promise in controlled tasks but failed in more complex settings.

Technical Limitations

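Computers of the time lacked the memory and processing power needed for real-world reasoning. Many problems also suffered from combinatorial explosion: the number of possibilities a program had to consider grew exponentially with the size of the problem, so methods that worked on toy examples became unusable on realistic ones. Tasks such as language understanding and vision proved far more difficult than expected.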

Unrealistic Expectations

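Researchers had promised rapid progress toward general intelligence. In 1965, for example, Herbert Simon predicted that machines would be capable, within twenty years, of doing any work a man can do. When results failed to materialize, trust eroded, and funding bodies concluded that such timelines had been far too optimistic.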

Policy and Funding Decisions

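In the United Kingdom, the Lighthill Report (1973) criticized AI research for failing to meet its objectives, leading to major funding cuts. In the United States, the 1966 ALPAC report had already curtailed funding for machine translation, and in the early 1970s DARPA shifted its support toward narrower, mission-oriented projects.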

The result was a sharp decline in investment and attention. Artificial intelligence did not disappear, but it retreated from the spotlight.

Recovery Through Narrow Applications

Despite reduced funding, AI research continued in more focused forms. Instead of general intelligence, researchers concentrated on specific tasks. This shift laid the groundwork for the next wave of optimism.

The Second AI Winter (Late 1980s): The Collapse of Expert Systems

In the 1980s, artificial intelligence experienced a resurgence through expert systems: rule-based programs designed to replicate the decision-making of specialists. Businesses adopted these systems for diagnostics, configuration, and planning; Digital Equipment Corporation's XCON, for example, configured customer orders for VAX computers.

Why Expert Systems Looked Promising

- They worked well in narrow domains

- They offered immediate commercial value

- They aligned with existing corporate decision-making models

Why They Failed to Scale

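Maintaining expert systems proved expensive, and the systems themselves were brittle. Each one required constant updates as knowledge changed. As rule bases grew, rules conflicted with one another, systems failed on cases their designers had not anticipated, and performance degraded over time.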

More importantly, expert systems could not adapt. They did not learn from experience, making them ill-suited for dynamic environments.

Economic Consequences

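By the late 1980s, the cost of maintaining expert systems outweighed their benefits. Around 1987, the market for specialized Lisp machines collapsed as cheaper general-purpose workstations caught up with them. Investment dried up, many AI companies failed, and confidence declined again, triggering the second AI winter.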

Lessons from the Second AI Winter

This period reinforced a critical insight: intelligence cannot be fully captured through static rules. Human expertise involves intuition, adaptation, and context—elements that expert systems could not replicate.

The Quiet Years and the Rise of New Ideas (1990s–Early 2000s)

The 1990s are sometimes described as a low point for AI visibility, but they were also a time of foundational progress. Researchers shifted away from rule-based approaches toward machine learning, probability, and statistics, using methods such as Bayesian networks and support vector machines.

Instead of programming intelligence directly, researchers built systems that learned patterns from data. This approach is loosely closer to how humans learn from experience than hand-written rules are.

However, progress was slow. Data was limited, computing power was expensive, and large-scale deployment was impractical. While the term “AI winter” is less commonly applied to the early 2000s, enthusiasm remained cautious.

What Changed: The Conditions for Recovery

Artificial intelligence did not recover because of a single breakthrough. It recovered because several enabling conditions converged.

Explosion of Data

The growth of the internet, digital services, and sensors generated vast amounts of data. This data made machine learning approaches viable at scale.

Advances in Computing Power

Affordable GPUs and cloud computing made it practical to train large, complex models. Problems that had been computationally impractical decades earlier became feasible.

Improved Algorithms

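Neural networks, long dismissed as impractical, re-emerged as powerful tools once combined with large datasets and computing power. Advances in optimization and architecture, such as ReLU activations, dropout, and convolutional networks, made deep networks far more reliable to train.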

Clearer Expectations

Perhaps most importantly, expectations became more grounded. Researchers focused on narrow, measurable improvements rather than human-level intelligence.

Modern AI Breakthroughs—and Familiar Risks

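The 2010s marked a period of visible AI breakthroughs. In 2012, a deep convolutional network (AlexNet) sharply reduced error rates on the ImageNet image-recognition benchmark; speech recognition improved markedly; and in 2016, DeepMind's AlphaGo defeated Go champion Lee Sedol. These successes reignited public and institutional enthusiasm.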

However, echoes of earlier cycles remain.

Renewed Optimism

AI is once again described as transformative. Claims about automation, intelligence, and economic impact are widespread.

Persistent Limitations

Despite progress, modern AI systems still lack understanding, judgment, and ethical reasoning. They excel at pattern recognition but struggle with context and meaning.

Risk of Repeating History

When expectations outrun reality, the risk of another correction grows. Overpromising and underdelivering have triggered AI winters before.

Human Decision-Making and Responsibility in AI Cycles

AI winters were not caused by technology alone. They were shaped by human decisions—funding priorities, policy choices, and communication strategies.

The Role of Institutions

Governments and corporations play a central role in shaping AI trajectories. Investment decisions determine which approaches flourish and which are abandoned.

The Importance of Honest Communication

Exaggerated claims undermine trust. Historically, AI winters followed periods of inflated promises rather than steady, transparent progress.

Ethics and Accountability

As AI systems affect real lives, responsibility cannot be delegated to technology. Human oversight and governance are essential to avoid both harm and backlash.

Lessons for Today’s AI Optimism

The history of artificial intelligence shows that progress is neither linear nor inevitable. Each wave of optimism has been followed by recalibration.

Key lessons include:

- Intelligence is more complex than early models assumed

- Scaling matters as much as clever ideas

- Human judgment remains essential

- Responsible deployment sustains long-term trust

AI advances when expectations are realistic and aligned with technical reality.

Conclusion: Why AI’s Past Still Matters

Artificial intelligence has failed—and recovered—multiple times not because it is flawed, but because humans repeatedly misunderstood its nature. AI winters remind us that intelligence cannot be rushed, oversimplified, or separated from human responsibility.

Today’s AI systems are more capable than ever, yet the same fundamental truths apply. Technology progresses within human systems—economic, social, and ethical. Understanding past AI winters helps ensure that modern breakthroughs are built on realism rather than hype.

The future of AI will not be determined by algorithms alone, but by how thoughtfully people choose to invest, govern, and use them.
