History and Evolution of Artificial Intelligence: From Early Ideas to the Modern AI Era

Introduction: What Artificial Intelligence Is and Why It Matters Today

Artificial Intelligence (AI) is no longer a distant concept found only in science fiction or academic research labs. It is embedded in everyday life—powering search engines, recommending content, assisting doctors, optimizing logistics, and increasingly shaping how people work and communicate. At its core, artificial intelligence refers to computer systems designed to perform tasks that typically require human intelligence, such as learning, reasoning, perception, and decision-making.

What makes AI particularly important today is not just its technical sophistication, but its societal impact. AI influences economies, labor markets, education systems, and even democratic processes. For individuals and businesses alike, understanding AI is no longer optional—it is foundational digital literacy. However, meaningful understanding requires more than knowing what AI can do. It requires knowing where it came from, why it developed the way it did, and what its limitations are.

This article takes a human-first approach to the history and evolution of artificial intelligence. Instead of framing AI as a replacement for human intelligence, it presents AI as a tool shaped by human goals, values, and constraints. By tracing AI’s journey—from early philosophical ideas to today’s data-driven systems—we can better understand what AI truly is, what it is not, and how it should be used responsibly in the modern world.

Early Foundations of AI (Before 1950)

Long before computers existed, humans were already thinking about artificial intelligence in philosophical and mechanical terms. Ancient myths and stories often featured artificial beings endowed with intelligence, from Greek automatons to medieval legends of golems. These narratives reflected a deep human curiosity: Can intelligence be created, or is it uniquely human?

In the 17th and 18th centuries, philosophers such as René Descartes and Gottfried Wilhelm Leibniz began exploring the nature of reasoning itself. Leibniz imagined a “calculus of thought,” a formal system where logic could be mechanized. This idea—that reasoning could follow rules—would later become foundational to AI.

The 19th century introduced early mechanical computing concepts. Charles Babbage’s Analytical Engine and Ada Lovelace’s insights into symbolic computation suggested that machines could manipulate symbols, not just numbers. Lovelace also issued an early caution: machines could only do what humans instructed them to do. This observation remains relevant today.

In the first half of the 20th century, advances in formal logic, mathematics, and neuroscience further shaped AI’s intellectual roots. Alan Turing’s 1936 work on computation and his concept of a “universal machine” laid the groundwork for digital computers. These early foundations established a critical idea: intelligence could potentially be studied, modeled, and partially replicated through formal systems.

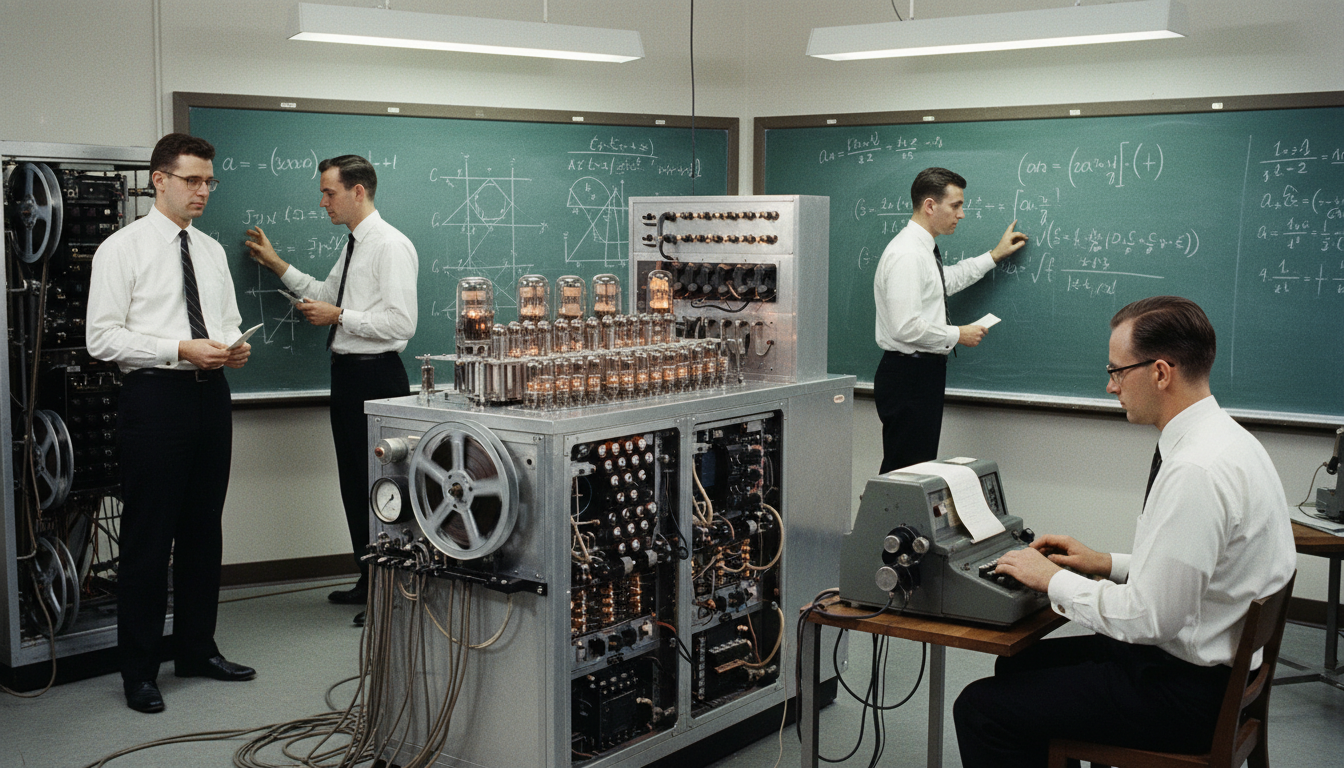

The Birth of AI as a Field (1950s–1960s)

The modern field of artificial intelligence officially began in the 1950s. In 1950, Alan Turing published his influential paper “Computing Machinery and Intelligence,” proposing what is now known as the Turing Test. Rather than defining intelligence abstractly, Turing suggested a practical benchmark: if a machine could convincingly imitate human conversation, it could be considered intelligent.

In 1956, the Dartmouth Summer Research Project on Artificial Intelligence established AI as a research field. John McCarthy had coined the term “artificial intelligence” in the 1955 proposal for the workshop. Researchers such as McCarthy, Marvin Minsky, Herbert Simon, and Allen Newell believed that human-level intelligence could be achieved within a few decades. Early successes fueled optimism: programs were developed that could prove mathematical theorems, play checkers, and manipulate symbolic logic.

This era focused heavily on symbolic AI, also known as rule-based systems. Intelligence was seen as the manipulation of symbols using explicit rules. While these systems worked well in constrained environments, they struggled with real-world complexity. Still, early AI research established core concepts such as problem solving, search algorithms, and knowledge representation.

The optimism of this period was genuine, but it underestimated the complexity of human intelligence. Understanding language, perception, and common sense proved far more difficult than initially imagined.

AI Winters and Challenges (1970s–1990s)

By the 1970s, the limitations of early AI systems became increasingly clear. Rule-based systems required enormous manual effort to encode knowledge and failed when faced with uncertainty or unfamiliar situations. Promises made to governments and funding agencies went unmet.

This led to periods known as AI winters, during which funding and public interest declined sharply. The first major AI winter occurred in the 1970s, followed by another in the late 1980s and early 1990s after expert systems failed to scale effectively in commercial environments.

These challenges were not failures of intelligence itself, but failures of assumptions. Researchers had underestimated the importance of data, learning, and adaptability. Human intelligence relies heavily on experience, context, and perception—areas where early AI was weakest.

Despite reduced funding, progress did not stop entirely. Important theoretical work continued in machine learning, probability theory, and neural networks. The groundwork for modern AI was quietly being laid during these difficult years.

The Revival of AI: Data, Computing Power, and Connectivity (Late 1990s–2000s)

The revival of artificial intelligence began in the late 1990s and early 2000s, driven by three transformative forces: data, computing power, and connectivity.

First, the rise of the internet generated massive amounts of digital data: text, images, video, and user behavior. Second, computing hardware became faster and cheaper, and graphics processing units (GPUs) later made massively parallel computation practical. Third, global connectivity allowed researchers to share data, models, and results at unprecedented scale.

Machine learning, particularly statistical approaches, gained prominence. Instead of hand-coding rules, systems learned patterns from data. This shift marked a fundamental change in AI development philosophy: from explicit programming to data-driven learning.
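To make the shift concrete, here is a minimal Python sketch contrasting the two philosophies on a toy spam filter. Everything in it is an assumption for illustration: the single feature (number of exclamation marks), the hand-picked cutoff of 5, the six labeled examples, and the function names are all hypothetical, and real systems learn from far richer data.

```python
# Toy spam filter: a hand-coded rule versus a cutoff learned from examples.
# All data, names, and cutoffs here are made up for illustration.

def rule_based_is_spam(exclamation_count: int) -> bool:
    """Explicit programming: a person picks the cutoff in advance."""
    return exclamation_count > 5  # hand-chosen, hypothetical cutoff


def learn_threshold(examples: list[tuple[int, bool]]) -> int:
    """Data-driven learning: pick the cutoff that misclassifies the fewest examples."""
    candidates = sorted({count for count, _ in examples})
    best_cutoff, best_errors = candidates[0], len(examples) + 1
    for cutoff in candidates:
        errors = sum((count > cutoff) != is_spam for count, is_spam in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# (exclamation marks in the message, labeled as spam?)
training_data = [(0, False), (1, False), (2, False), (3, True), (4, True), (7, True)]

learned_cutoff = learn_threshold(training_data)


def learned_is_spam(exclamation_count: int) -> bool:
    """Same form of rule, but the cutoff came from the data."""
    return exclamation_count > learned_cutoff


print(learned_cutoff)         # 2
print(rule_based_is_spam(4))  # False: the hand-coded rule misses this message
print(learned_is_spam(4))     # True: the learned cutoff catches it
```

The rule has the same shape in both cases. What changes is who chooses the number: a programmer guessing in advance, or a procedure that fits it to labeled examples.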

Notable milestones included IBM’s Deep Blue defeating chess champion Garry Kasparov in 1997 and the increasing success of machine learning in speech recognition, recommendation systems, and search engines.

This period demonstrated that AI systems could achieve practical value when paired with large datasets and well-defined objectives.

Modern AI Era (Machine Learning, Deep Learning, Generative AI)

The modern AI era is defined by machine learning and, more recently, deep learning. Deep neural networks—loosely inspired by the human brain—enabled dramatic improvements in image recognition, natural language processing, and game-playing systems.

Breakthroughs such as the 2012 deep learning results on the ImageNet benchmark, AlphaGo, and transformer-based language models reshaped public perception of AI. Generative AI systems can now produce text, images, music, and code, often with near-human fluency.

However, these systems do not “understand” in a human sense. They identify statistical patterns in data and generate outputs based on probabilities. Their apparent intelligence is a reflection of data scale and algorithmic sophistication, not consciousness or intent.
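A tiny example shows what "generating outputs based on probabilities" means in practice. The sketch below is a bigram model: it counts which word follows which in a made-up eleven-word corpus, then samples the next word in proportion to those counts. It is a deliberately simplified stand-in, not how modern language models are built, and the corpus and function names are assumptions for illustration.

```python
import random
from collections import Counter, defaultdict

# Made-up training text for illustration.
corpus = "the cat sat on the mat the cat ate the fish".split()

# Count how often each word follows each other word.
follow_counts: dict[str, Counter] = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    follow_counts[current][following] += 1


def next_word_probabilities(word: str) -> dict[str, float]:
    """Turn raw follow-counts into probabilities for the next word."""
    counts = follow_counts[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def generate(start: str, length: int, seed: int = 0) -> list[str]:
    """Sample up to `length` words, each drawn from the learned probabilities."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(length - 1):
        probs = next_word_probabilities(words[-1])
        if not probs:  # this word was never followed by anything in the corpus
            break
        choices, weights = zip(*probs.items())
        words.append(rng.choices(choices, weights=weights)[0])
    return words


print(next_word_probabilities("the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(" ".join(generate("the", 6)))
```

The model can produce plausible-looking word sequences, yet it holds nothing but counts. It has no notion of what a cat or a mat is.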

This distinction is crucial for maintaining a realistic, human-first perspective on AI.

How AI Works Today (High-Level Explanation)

Modern AI systems typically operate through the following process:

- Data Collection – Large datasets are gathered from relevant domains.

- Training – Algorithms learn patterns by adjusting internal parameters.

- Evaluation – Models are tested for accuracy, bias, and robustness.

- Deployment – Systems are integrated into real-world applications.

- Monitoring – Performance is continuously assessed, and the model is updated as needed.
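The five steps above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not how production systems are built: the data (hours studied versus exam score), the single parameter `w`, the learning rate, and every function name are hypothetical.

```python
# Toy end-to-end loop: collect -> train -> evaluate -> deploy -> monitor.
# Hypothetical data: exam score = 10 * hours studied.

# 1. Data collection: (hours, score) pairs, split into training and held-out sets.
train = [(1.0, 10.0), (2.0, 20.0), (3.0, 30.0)]
held_out = [(4.0, 40.0), (5.0, 50.0)]


# 2. Training: adjust one internal parameter (w) to reduce squared error.
def train_model(data, learning_rate=0.01, steps=2000):
    w = 0.0
    for _ in range(steps):
        # Gradient of mean squared error for the model: prediction = w * x
        gradient = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= learning_rate * gradient
    return w


# 3. Evaluation: measure error on data the model did not train on.
def mean_abs_error(w, data):
    return sum(abs(w * x - y) for x, y in data) / len(data)


w = train_model(train)
test_error = mean_abs_error(w, held_out)


# 4. Deployment: expose the trained parameter behind a simple function.
def predict(hours):
    return w * hours


# 5. Monitoring: flag the model for retraining if recent error drifts too high.
def needs_retraining(recent_data, tolerance=1.0):
    return mean_abs_error(w, recent_data) > tolerance


print(round(w, 3))                       # 10.0
print(needs_retraining([(4.0, 40.0)]))   # False: predictions still match reality
print(needs_retraining([(4.0, 60.0)]))   # True: the world changed, the model did not
```

The last line shows why monitoring matters: the model keeps applying the pattern it learned even after the underlying relationship has shifted.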

AI does not reason like humans. It does not possess self-awareness, values, or moral judgment. It operates within the constraints defined by human designers, data sources, and objectives.

Limitations of AI: Why AI Cannot Replace Humans

Despite impressive capabilities, AI has fundamental limitations. It lacks genuine understanding, emotional intelligence, ethical reasoning, and contextual awareness. AI systems cannot define goals independently or understand consequences beyond their training data.

Bias in data can lead to biased outcomes. Over-reliance on AI can also create risks, especially in high-stakes domains such as healthcare, law, and governance.
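A small worked example shows how this can happen. In the sketch below, a single score cutoff is learned from training data in which group A supplies eight of the ten examples, and the scores that indicate a positive case run lower for group B. The groups, scores, and labels are invented for illustration and do not come from any real dataset.

```python
# Toy illustration: skewed training data produces unequal accuracy per group.
# Each row is (group, score, true_label). All values are made up.

training_rows = [
    ("A", 20, False), ("A", 35, False), ("A", 40, False), ("A", 45, False),
    ("A", 55, True), ("A", 60, True), ("A", 70, True), ("A", 80, True),
    ("B", 25, False), ("B", 35, True),  # group B is badly under-represented
]

test_rows = [
    ("A", 30, False), ("A", 50, False), ("A", 65, True), ("A", 75, True),
    ("B", 20, False), ("B", 32, True), ("B", 40, True), ("B", 58, True),
]


def learn_cutoff(rows):
    """Pick the score cutoff that makes the fewest mistakes on the training rows."""
    candidates = sorted({score for _, score, _ in rows})
    return min(
        candidates,
        key=lambda c: sum((score >= c) != label for _, score, label in rows),
    )


def accuracy_by_group(cutoff, rows):
    """Fraction of correct predictions, reported separately for each group."""
    correct, total = {}, {}
    for group, score, label in rows:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + ((score >= cutoff) == label)
    return {group: correct[group] / total[group] for group in total}


cutoff = learn_cutoff(training_rows)
per_group = accuracy_by_group(cutoff, test_rows)

print(cutoff)     # 55: the cutoff that suits group A's examples
print(per_group)  # {'A': 1.0, 'B': 0.5}
```

Overall accuracy on the test rows is 75%, which looks acceptable until it is broken down by group. This is one reason evaluation needs to look at subgroups, not just a single aggregate number.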

These limitations highlight why AI should be viewed as an augmentation tool, not a replacement for human judgment.

Human-First Approach: Humans and AI Working Together

A human-first approach to AI prioritizes human values, oversight, and accountability. AI excels at processing large volumes of information quickly, while humans excel at creativity, empathy, and ethical reasoning.

When combined effectively, humans and AI can achieve outcomes neither could accomplish alone. Examples include decision support systems, assistive technologies, and productivity tools that enhance—not replace—human expertise.

Future of AI with Responsible and Human-Centered Use

The future of AI depends less on technical capability and more on governance, education, and ethical design. Responsible AI requires transparency, fairness, privacy protection, and clear accountability.

Human-centered AI design ensures that technology serves human needs rather than quietly reshaping them. This approach aligns innovation with long-term societal well-being.

Conclusion: What This Means for Individuals and Businesses

Understanding the history and evolution of artificial intelligence provides clarity in an age of rapid change. AI is not an autonomous force—it is a human-created tool shaped by choices, incentives, and values.

For individuals, AI literacy empowers informed decision-making. For businesses, a human-first AI strategy builds trust, resilience, and sustainable innovation.

The future of AI is not about machines replacing humans. It is about humans deciding how technology should serve society—intelligently, ethically, and responsibly.
