From Symbolic AI to Machine Learning: Why Early AI Failed to Scale

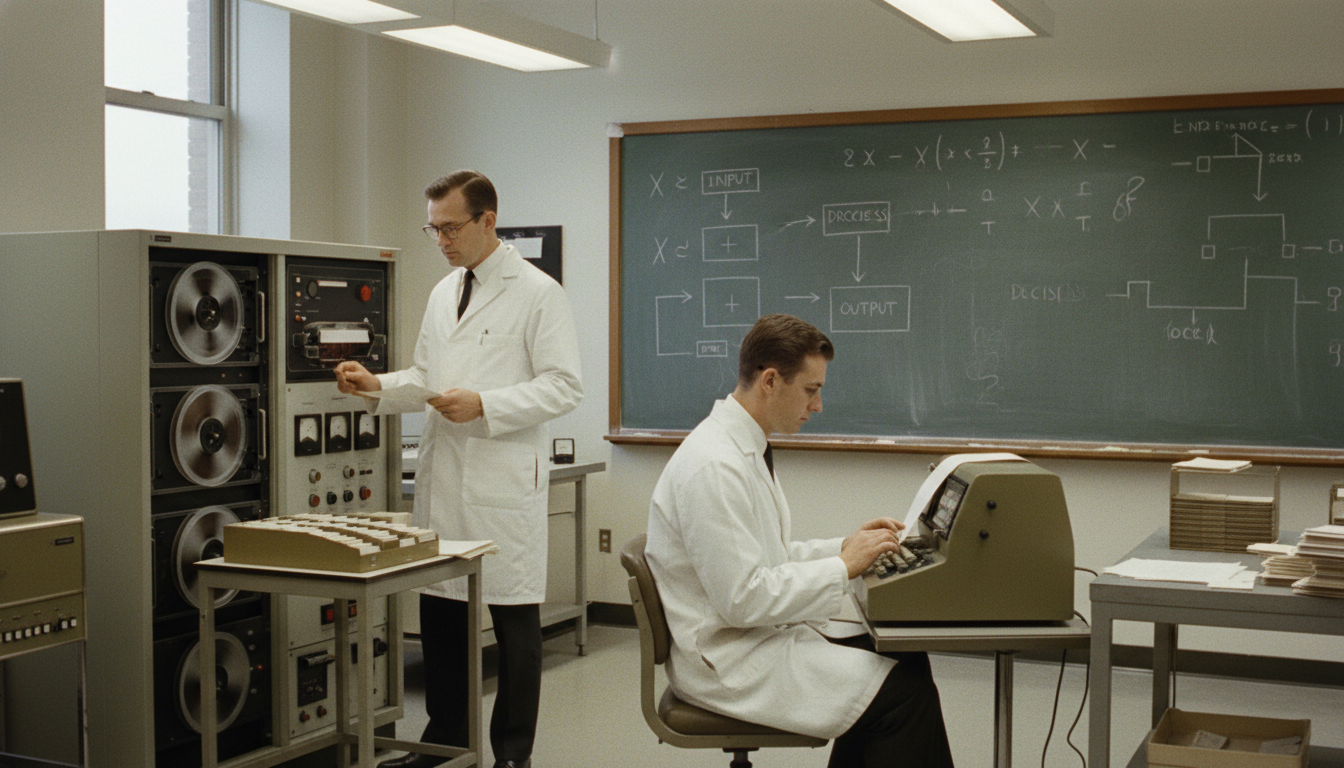

In the early days of artificial intelligence, many researchers believed they had found the right path. By teaching computers explicit rules—much like instructions written in a manual—they hoped to replicate human reasoning. This approach, known as symbolic AI, initially showed promise. Early systems could solve logic puzzles, prove mathematical theorems, and mimic expert decision-making in narrow domains.

At the time, symbolic AI looked like a clear route toward intelligent machines. If human thinking followed logical steps, then encoding those steps into software seemed reasonable. Yet as these systems were applied to more complex, real-world problems, their limitations became increasingly obvious. Understanding why symbolic AI failed to scale helps explain why modern AI took a very different direction.

What Symbolic AI and Rule-Based Systems Tried to Do

Symbolic AI is based on the idea that intelligence can be represented through symbols and rules. In practice, this meant building systems that followed “if–then” logic. For example: if a patient has symptom A and symptom B, then diagnose condition C.

These rule-based systems relied on inference engines that applied predefined rules to structured information. One well-known application was the expert system: software designed to replicate the decision-making of human specialists in areas like medicine or engineering.
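
As a rough illustration of the if-then style described above, the following Python sketch applies rules repeatedly until nothing new can be derived, a strategy often called forward chaining. The rule set and the fact names (`symptom_a`, `condition_e`, and so on) are hypothetical placeholders, not taken from any real expert system.

```python
# Hypothetical rules: each pairs a set of required facts with a conclusion.
RULES = [
    ({"symptom_a", "symptom_b"}, "condition_c"),
    ({"condition_c", "symptom_d"}, "condition_e"),
]


def forward_chain(facts, rules=RULES):
    """Apply rules repeatedly until no new conclusion can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires only when every one of its conditions is known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"symptom_a", "symptom_b", "symptom_d"})
print(sorted(derived))
```

With only `symptom_a` known, no rule fires and the sketch derives nothing new, which hints at how such systems behave once inputs fall outside what the rules anticipate.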

In controlled environments, symbolic AI worked reasonably well. When problems were narrow, stable, and well-defined, rules could capture much of the required knowledge. This success fueled optimism and shaped much of early AI research.

Why Symbolic AI Failed to Scale

As researchers pushed symbolic AI beyond small, well-structured problems, fundamental weaknesses emerged. These weaknesses were not minor technical issues—they were structural limitations.

Rigid Rules and Lack of Flexibility

Rule-based systems depend entirely on predefined logic. If a situation falls outside the rules, the system fails. Real-world environments, however, are rarely predictable. Humans adapt easily when conditions change; symbolic AI could not.

Every new scenario required new rules, and updating systems became slow and fragile. Small changes often caused unexpected failures elsewhere, making systems difficult to maintain at scale.

Inability to Handle Uncertainty

Human reasoning is comfortable with uncertainty. People make judgments with incomplete information and revise decisions as new evidence appears. Symbolic AI struggled with this.

Early systems expected clean, unambiguous inputs. When data was noisy, contradictory, or incomplete, as is common in real life, the systems either produced incorrect results or stopped working altogether. This was one of the most serious limitations of early AI.
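
A small sketch can make this concrete. The `strict_rule` function below is a hypothetical example that assumes every field is present and boolean. When a field is missing, it has no way to reason around the gap and simply halts.

```python
def strict_rule(record):
    """Hypothetical rule that assumes complete, boolean inputs."""
    if record["symptom_a"] and record["symptom_b"]:
        return "condition_c"
    return "no_diagnosis"


def run(record):
    """Run the rule and report a halt instead of crashing the program."""
    try:
        return strict_rule(record)
    except KeyError as missing:
        # The rule cannot weigh partial evidence; it just stops.
        return f"halted: missing {missing.args[0]}"


complete = run({"symptom_a": True, "symptom_b": True})
incomplete = run({"symptom_a": True})  # symptom_b was never recorded
print(complete)
print(incomplete)
```

A person given the same incomplete record would likely ask a follow-up question or make a provisional judgment. The rule, as written here, can do neither.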

The Explosion of Edge Cases

As symbolic systems grew, so did the number of exceptions they needed to handle. Each new rule could interact with every existing rule, so the number of potential conflicts and edge cases grew combinatorially rather than in step with the size of the rule set.

Keeping track of these interactions became impractical. Instead of simplifying decision-making, large rule sets became tangled and opaque, even to their creators. Intelligence did not scale; it fractured.
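
To get a feel for the growth, the sketch below counts two simplified quantities: the number of rule pairs that could interact, and the number of distinct input situations when each condition is either true or false. Both are illustrative assumptions, since real rule bases interact in more complicated ways.

```python
from math import comb


def pairwise_interactions(num_rules):
    """Number of distinct rule pairs that could conflict or overlap."""
    return comb(num_rules, 2)


def input_combinations(num_conditions):
    """Number of distinct situations when each condition is true or false."""
    return 2 ** num_conditions


for n in (10, 20, 40):
    print(n, pairwise_interactions(n), input_combinations(n))
```

Under these assumptions, 40 rules already give 780 pairs to check, and 40 yes-or-no conditions allow more than a trillion possible situations, far more than anyone could enumerate by hand.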

Difficulty Capturing Human Intuition and Context

Perhaps the deepest challenge was that much of human intelligence is implicit. People rely on intuition, context, and experience that they cannot fully articulate as rules. Symbolic AI assumed that all knowledge could be explicitly defined.

This assumption proved false. Contextual understanding—such as recognizing sarcasm, social nuance, or changing priorities—resisted formalization. Without these elements, symbolic systems remained brittle and shallow.

How These Failures Led to Machine Learning

The struggles of symbolic AI forced researchers to rethink their assumptions. Instead of trying to encode intelligence directly, a new question emerged: what if machines could learn patterns from data instead of relying on handcrafted rules?

This shift marked the emergence of machine learning. Rather than defining every step, researchers allowed systems to infer relationships by analyzing large datasets. These approaches handled uncertainty better, adapted to new situations more easily, and scaled with increased data and computing power.
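
The contrast with handcrafted rules can be sketched with a perceptron, one of the simplest learning algorithms. It is used here purely as an illustration and is not meant to represent any specific historical system. The tiny dataset is hypothetical: it encodes "symptom A and symptom B imply condition C" as labeled examples, and the program infers a decision boundary from them instead of being given the rule.

```python
# Hypothetical labeled examples: ((symptom_a, symptom_b), has_condition_c)
EXAMPLES = [
    ((0, 0), 0),
    ((0, 1), 0),
    ((1, 0), 0),
    ((1, 1), 1),
]


def predict(weights, bias, features):
    """Return 1 if the weighted sum of the features is positive, else 0."""
    total = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Adjust weights only when a prediction is wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train_perceptron(EXAMPLES)
predictions = [predict(weights, bias, features) for features, _ in EXAMPLES]
print(predictions)
```

No if-then rule appears anywhere in the training code. The behavior comes from the examples, so changing the data changes what the system does without anyone rewriting its logic.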

This transition did not happen overnight, nor did it solve all problems. But it addressed many of the core limitations that symbolic AI could not overcome. The broader historical development of AI, including this shift, is explored in the article on the History and Evolution of Artificial Intelligence, which places symbolic AI within its larger context.

Conclusion: A Necessary Turning Point in AI Development

Symbolic AI was an essential step in artificial intelligence research, but it could not scale to the complexity of the real world. Its reliance on rigid rules, inability to manage uncertainty, and failure to capture human intuition exposed the limits of rule-based intelligence.

These limitations were not failures of imagination—they were lessons. By revealing what could not be easily formalized, symbolic AI paved the way for data-driven approaches that define modern AI. Understanding this transition helps explain why today’s systems look the way they do—and why human judgment remains essential alongside them.
